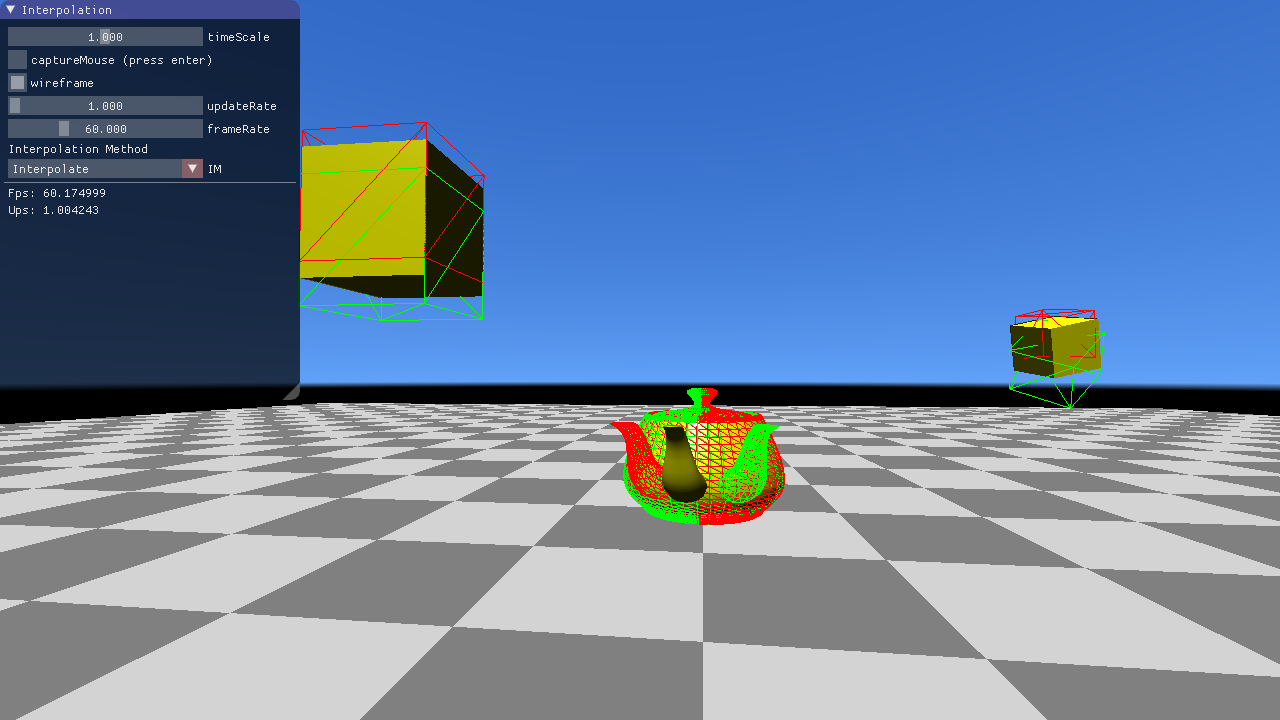

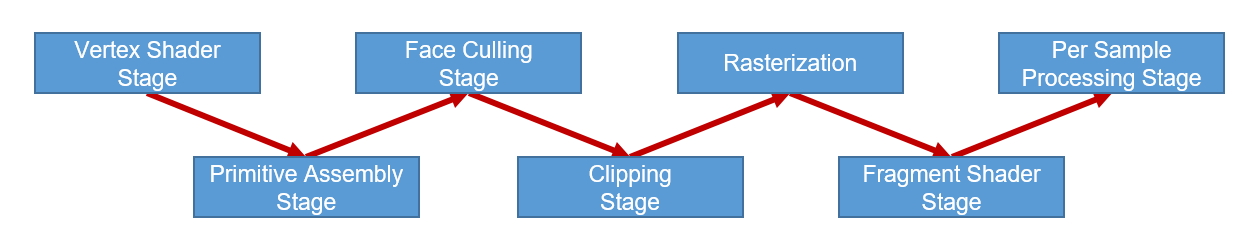

In this task we have a look at a simplified 2D rendering pipeline.

The rendering pipeline is called in RenderingPipeline.Render.

- The vertices of the geometry are handed to the Vertex Shader Stage. In this stage the vertex shader is called for every vertex. The result is stored in a vertex stream.
- In the Primitive Assembly Stage the primitives are assembled using the result of the Vertex Shader Stage and the IBO (Index Buffer Object). You have to implement the assembly stage (RenderingPipeline.PrimitiveAssemblyStage)! Follow the comments in the source code.
- Knowing the primitives, the pipeline gives the possibility to cull unnecessary ones (e.g. if a primitive does not point into the direction of the camera). This is done in the Face Culling Stage. You have to implement the primitive culling in RenderingPipeline.LineCulling!
- All primitives that pass the culling test are given to the Clipping Stage. The Clipping Stage implements Alpha Clipping in Homogeneous Coordinates and clips each primitive against the view volume.
- Based on the result of the clipping stage the primitives are drawn using Rasterization. The rasterizer dehomogenizes the projected vertex positions, applies the viewport transformation, maps depth to $[0,1]$, interpolates attributes and emits fragments that are covered by the primitive.
- The fragments are passed to the Fragment Shader Stage, where the Fragment Shader is called for every fragment.
- The results of the Fragment Shader (especially the depth) are passed to the Per Sample Processing Stage. This stage writes the results to the corresponding render target buffers based on the depth of a fragment and the depth test mode. Your task is to implement the depth test in DepthBuffer.TestAndSetFragment. This method compares the old value with the fragment depth value, sets the new depth value if it passes the test and returns the result of the test. Follow the comments in the source code. Note that the depth buffer stores and compares depth values with a low-precision fixed-point representation. You can adjust the precision using the sliders below.
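The depth test described in the last step can be sketched roughly as follows. This is a standalone illustration and not the framework's DepthBuffer class: the class name SketchDepthBuffer, the constructor parameters, the mode strings and the quantization by rounding are all assumptions made for this example. The actual signature and fixed-point format are given by the comments in the source code.

```javascript
// Illustrative only: a tiny depth buffer with a fixed-point representation.
// All names here (SketchDepthBuffer, bits, the mode strings) are hypothetical.
class SketchDepthBuffer {
  constructor(width, height, bits) {
    this.width = width;
    // Largest value representable with the given number of bits.
    this.maxValue = Math.pow(2, bits) - 1;
    // Initialize every sample to the far plane (depth 1.0).
    this.data = new Array(width * height).fill(this.maxValue);
  }

  // Convert a depth in [0, 1] to the low-precision fixed-point value.
  quantize(depth) {
    const clamped = Math.min(1, Math.max(0, depth));
    return Math.round(clamped * this.maxValue);
  }

  // Compare the stored value with the fragment depth, store the new value
  // if the test passes, and return whether it passed.
  testAndSet(x, y, depth, mode = "less") {
    const index = y * this.width + x;
    const oldValue = this.data[index];
    const newValue = this.quantize(depth);
    let passed;
    switch (mode) {
      case "less":
        passed = newValue < oldValue;
        break;
      case "lequal":
        passed = newValue <= oldValue;
        break;
      case "always":
        passed = true;
        break;
      default:
        passed = false; // unknown modes reject the fragment
    }
    if (passed) this.data[index] = newValue;
    return passed;
  }
}
```

With only 4 bits, the depths 0.5 and 0.52 are stored as the same value in this sketch, so a second fragment at 0.52 fails a "less" test but passes a "lequal" test.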

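Implement the parts of the pipeline that are marked as your task above: RenderingPipeline.PrimitiveAssemblyStage, RenderingPipeline.LineCulling and DepthBuffer.TestAndSetFragment.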
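As a rough orientation for the assembly and culling stages, the following sketch shows the general idea on plain arrays and objects. It is not the framework's code: the function names, the vertex layout ({x, y}), the assumption that two consecutive indices form one line, and the orientation convention for the line normal are all assumptions made for this example. The comments in the source code define the real conventions.

```javascript
// Illustrative only: assemble line primitives from a vertex stream and an
// index buffer. Assumes that two consecutive indices form one line.
function assembleLines(vertexStream, indexBuffer) {
  const primitives = [];
  for (let i = 0; i + 1 < indexBuffer.length; i += 2) {
    primitives.push([
      vertexStream[indexBuffer[i]],
      vertexStream[indexBuffer[i + 1]],
    ]);
  }
  return primitives;
}

// Illustrative only: facing test for a 2D line seen from a camera position.
// Assumed convention: the normal of the line a -> b is its direction (dx, dy)
// rotated by +90 degrees, i.e. (-dy, dx). The line counts as front facing
// if this normal points towards the camera.
function isLineFrontFacing(a, b, cameraPosition) {
  const normal = { x: -(b.y - a.y), y: b.x - a.x };
  const toCamera = { x: cameraPosition.x - a.x, y: cameraPosition.y - a.y };
  return normal.x * toCamera.x + normal.y * toCamera.y > 0;
}

// Keep only the lines that face the camera.
function cullLines(primitives, cameraPosition) {
  return primitives.filter(([a, b]) => isLineFrontFacing(a, b, cameraPosition));
}
```

The index buffer lets several primitives share a vertex: with the indices 0, 1, 1, 2 the vertex with index 1 is the end of the first line and the start of the second.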

Experiment with the sliders that control the near and far planes and the precision of the depth buffer. When does z-fighting occur?
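The interplay of these parameters can also be explored numerically. The sketch below assumes a standard perspective depth mapping to $[0,1]$ and a fixed-point quantization by rounding. Both are assumptions, since the projection and the fixed-point format used by the framework are not shown here, and the function names are hypothetical.

```javascript
// Illustrative only: map a view-space distance z (near <= z <= far) to a
// depth in [0, 1], assuming a standard perspective projection.
function depth01(z, near, far) {
  return (far * (z - near)) / (z * (far - near));
}

// Illustrative only: store a depth in [0, 1] with the given number of bits.
function quantizeDepth(depth, bits) {
  const maxValue = Math.pow(2, bits) - 1;
  return Math.round(depth * maxValue);
}

// True if two distances end up as the same stored depth value, which means
// the depth test can no longer tell which of the two surfaces is in front.
function sameStoredDepth(zA, zB, near, far, bits) {
  return (
    quantizeDepth(depth01(zA, near, far), bits) ===
    quantizeDepth(depth01(zB, near, far), bits)
  );
}

console.log(sameStoredDepth(10, 11, 0.001, 100, 8));
console.log(sameStoredDepth(10, 11, 5, 20, 8));
```

Under these assumptions, the distances 10 and 11 collapse to the same 8-bit value for near = 0.001 and far = 100, while they stay distinct for near = 5 and far = 20.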

Write your answer in the text file Basic1.txt.

You can use the mouse button to place the look-at point. The camera position can be set by holding [ctrl] and pressing the mouse button.

In this task, you will work with x3dom, an HTML-based 3D framework that creates and renders 3D content specified in HTML markup. Because of the hierarchical nature of HTML, this framework is well suited to the creation of a scene graph.

For a short explanation of x3dom, consider the simple image on the right (showing three stacked boxes and a transparent ball) and have a look at the HTML code producing it in index.html.

As you can see, the scene consists of transforms and shapes in a hierarchical way: Transforms can contain both shapes and further transforms. Shapes contain renderable geometry, such as boxes or spheres, and appearance nodes which specify properties of the rendered geometry, for example color and transparency.
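The hierarchy described above can be illustrated with a small sketch that describes a scene as nested plain objects and prints it as x3dom-style markup. The helper functions node, toMarkup and coloredShape are hypothetical and exist only for this example. The example scene (a red box with a half-transparent blue sphere above it) is likewise made up and is not the content of index.html.

```javascript
// Illustrative only: describe a scene graph node as a plain object.
function node(tag, attrs = {}, children = []) {
  return { tag, attrs, children };
}

// Serialize a node and its children to indented markup.
function toMarkup(n, indent = "") {
  const attrs = Object.entries(n.attrs)
    .map(([key, value]) => ` ${key}="${value}"`)
    .join("");
  const open = `${indent}<${n.tag}${attrs}>`;
  const close = `</${n.tag}>`;
  if (n.children.length === 0) return `${open}${close}\n`;
  const inner = n.children.map((c) => toMarkup(c, indent + "  ")).join("");
  return `${open}\n${inner}${indent}${close}\n`;
}

// A shape holds an appearance (with a material) and one geometry node.
function coloredShape(geometry, diffuseColor, transparency = 0) {
  return node("shape", {}, [
    node("appearance", {}, [node("material", { diffuseColor, transparency })]),
    geometry,
  ]);
}

// A transform containing a shape and a further transform.
const scene = node("transform", { translation: "0 0 0" }, [
  coloredShape(node("box", { size: "2 2 2" }), "1 0 0"),
  node("transform", { translation: "0 2 0", scale: "0.5 0.5 0.5" }, [
    coloredShape(node("sphere", { radius: "1" }), "0 0 1", 0.5),
  ]),
]);

console.log(toMarkup(scene));
```

Because the inner transform is a child of the outer one, moving or scaling the outer transform would affect both the box and the sphere.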

You can drag around the scene using the mouse. Feel free to change the example code to get a feeling for the framework.

Your task is to set up a so-called Koch fractal in 3D using the scene graph capability of x3dom. The fractal scheme is easy. Imagine a cube divided into $3\times3\times3$ smaller cubes. Now take away the eight corner cubes, the cube in the middle and the six cubes in the centers of the sides. The remaining cubes are then subdivided in the same way to produce even smaller cubes, and so on.
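One step of this scheme can be checked with a short sketch. It labels the 27 small cubes with offsets in $\{-1, 0, 1\}^3$ and keeps those that are neither a corner, nor a side center, nor the middle cube. The function name remainingOffsets is hypothetical and not part of Basic2.js.

```javascript
// Illustrative only: offsets of the small cubes that remain after one step.
function remainingOffsets() {
  const offsets = [];
  for (const x of [-1, 0, 1]) {
    for (const y of [-1, 0, 1]) {
      for (const z of [-1, 0, 1]) {
        const zeros = [x, y, z].filter((v) => v === 0).length;
        // zeros === 0: corner cube, zeros === 2: center of a side,
        // zeros === 3: cube in the middle. Only cubes with exactly one
        // zero coordinate (the ones along the edges) remain.
        if (zeros === 1) offsets.push([x, y, z]);
      }
    }
  }
  return offsets;
}

console.log(remainingOffsets().length);
```

Of the 27 cubes, 8 + 6 + 1 = 15 are removed, so 12 remain.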

Have a look at the code in Basic2.js and complete it to produce a Koch fractal in 3D!

First, you should set up a single box to fill the root transformation created in createScene(). Try to comprehend the scene setup you find in this method and build the nodes necessary for the creation of a single white box in createBox(). Once you are done, you should see a big white cube in the center of the now dark blue canvas. Feel free to rotate it and look at it from all sides.

Next, you should implement the function constructChildTransformation() which creates the transformations for the smaller boxes in each step. Follow the instructions in the corresponding TODO and build the scene graph for the fractal!

Once this works, you should see a shape formed of 12 cubes (the ones remaining after one step of the fractal scheme described above). Now you can use the slider to adjust the number of fractal scheme iterations to further subdivide the cube.
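The effect of the iteration count can be sketched without x3dom by computing the cubes directly. The function fractalCubes below is hypothetical: it returns a flat list of cube centers and edge lengths, whereas the task builds nested transformations. In a scene graph, each level would presumably be a transform with a translation and a uniform scale of 1/3 relative to its parent, but the TODO in Basic2.js is authoritative.

```javascript
// Illustrative only: list the cubes of the fractal after a number of
// iterations. center is [x, y, z], edge is the edge length of the cube.
function fractalCubes(center, edge, iterations) {
  if (iterations === 0) return [{ center, edge }];
  const childEdge = edge / 3;
  const cubes = [];
  for (const x of [-1, 0, 1]) {
    for (const y of [-1, 0, 1]) {
      for (const z of [-1, 0, 1]) {
        const zeros = [x, y, z].filter((v) => v === 0).length;
        if (zeros !== 1) continue; // removed by the fractal scheme
        const childCenter = [
          center[0] + x * childEdge,
          center[1] + y * childEdge,
          center[2] + z * childEdge,
        ];
        // Subdivide the remaining cube in the same way.
        for (const cube of fractalCubes(childCenter, childEdge, iterations - 1)) {
          cubes.push(cube);
        }
      }
    }
  }
  return cubes;
}

console.log(fractalCubes([0, 0, 0], 1, 2).length);
```

Each iteration multiplies the number of cubes by 12 and divides the edge length by 3, so the node count grows quickly with the slider value.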